As software applications become ubiquitous, they must adapt seamlessly to a wide range of devices. Porting complex user interfaces from one platform to another requires considerable effort. Generating user interfaces dynamically, for any platform, directly from the application code therefore brings immediate and substantial benefits. In this paper, we sketch MORE, a framework that generates user interfaces from a reflective analysis of data and Java code.

Keywords: Multi-device, VoiceXML, WML, Java, User interface generation.

Software developers spend up to 50% of a project's total time and resources [1] on the design, implementation, and testing of friendly, intuitive user interfaces (UIs). Despite this significant investment, applications initially developed for a standard Web browser must often be re-implemented from scratch to reach new devices such as WAP phones or voice browsers. It would be very useful if a system could dynamically generate a user interface from an analysis of the application logic and data models. Yet development environments are aimed at building applications whose execution contexts are known a priori. For instance, the Java programming language was designed to be platform-independent, but at the time it was designed very few devices could host a Java Virtual Machine (JVM). Today the motto “Write once, run anywhere” is therefore too optimistic: taken literally it fails, and it holds only for specific classes of devices. We designed and implemented the Multichannel Object REnderer (MORE) to address these issues. On the one hand, MORE provides deployment schemes that match the features of the target device; on the other hand, it dynamically generates a UI from the application logic, which it extracts directly from the Java code.

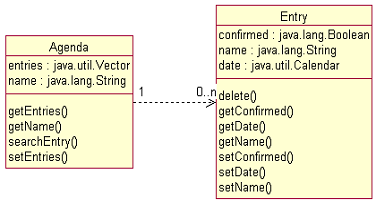

The Model-View-Controller (MVC) strategy, which relies on the observer design pattern [2], decomposes a system into models strictly bound to data and logic, and view-controllers (VCs) that present and manipulate them (Fig. 1). For each model, one or more VCs may be provided. While it is quite easy to define device-independent models, it is hard to define device-independent VCs, because they must be expressed in terms of widgets specific to the device. MORE is inspired by the MVC approach: at run time, it transforms the objects of the models into VC objects by means of a “rendering process”. An application written for MORE contains a set of classes defining the platform-independent “model” for the UI. Through Java Reflection, a model is inspected at run time to identify its data (fields) and the actions (methods) available to the user. From this information and the target device profile, a concrete VC is built. For each field, a run-time editor is placed in the VC. Basic data objects (strings, dates, collections, etc.) have predefined run-time editors, while composite data objects are represented by links to other VCs, built by applying the rendering process recursively. For each method, a widget that triggers it, e.g. a button, is inserted in the VC.
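The inspection step can be sketched with the standard `java.lang.reflect` API. This is a minimal, hypothetical illustration, not MORE's code: the class names `ModelInspector`, `SampleContact`, and `SampleAddress`, the set of types treated as basic, and the convention that public, parameterless instance methods are user actions are all assumptions.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collection;
import java.util.Date;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of the reflective inspection step; not MORE's actual code. */
final class ModelInspector {
    private ModelInspector() {}

    /** Assumed rule: basic types get a predefined editor; anything else links to a nested VC. */
    static boolean isBasic(Class<?> type) {
        return type.isPrimitive()
                || type == String.class
                || Number.class.isAssignableFrom(type)
                || Date.class.isAssignableFrom(type)
                || Collection.class.isAssignableFrom(type);
    }

    /** Maps each instance field name to "editor" (basic type) or "link" (composite type). */
    static Map<String, String> fields(Class<?> model) {
        Map<String, String> result = new LinkedHashMap<>();
        for (Field f : model.getDeclaredFields()) {
            // Skip static fields and compiler-generated ones.
            if (Modifier.isStatic(f.getModifiers()) || f.isSynthetic()) {
                continue;
            }
            result.put(f.getName(), isBasic(f.getType()) ? "editor" : "link");
        }
        return result;
    }

    /** Assumed convention: public, non-static, parameterless methods are user actions. */
    static List<String> actions(Class<?> model) {
        List<String> result = new ArrayList<>();
        for (Method m : model.getDeclaredMethods()) {
            int mod = m.getModifiers();
            if (Modifier.isPublic(mod) && !Modifier.isStatic(mod)
                    && m.getParameterCount() == 0 && !m.isSynthetic()) {
                result.add(m.getName());
            }
        }
        return result;
    }
}

/** Hypothetical example models. */
class SampleAddress {
    String city;
}

class SampleContact {
    String name;
    Date birthday;
    SampleAddress address; // composite: would be rendered as a link to another VC
    public void call() { /* application logic would go here */ }
}
```

Under these assumptions, `name` and `birthday` would receive predefined editors, `address` would become a link to a VC built recursively for `SampleAddress`, and `call` would become a button.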

Deployment is also an issue. MORE classifies devices into fat, thin, and web-like clients. For each category there is a rendering engine, which is loaded first and then creates the VCs from the reflective description of the model. In this way the same model can be used from any channel for which a rendering engine is available.
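A per-category engine registry could look roughly like the following. `DeviceCategory`, `RenderingEngine`, and `EngineRegistry` are hypothetical names, and an engine here simply returns a string; a real fat-client engine would build widgets rather than markup.

```java
import java.util.EnumMap;
import java.util.Map;

/** The three device categories described in the text. */
enum DeviceCategory { FAT, THIN, WEB_LIKE }

/** Hypothetical engine interface: turns a model object into a device-specific UI description. */
interface RenderingEngine {
    String render(Object model);
}

/** Hypothetical registry: one engine per category, so a single model can serve every channel. */
final class EngineRegistry {
    private final Map<DeviceCategory, RenderingEngine> engines =
            new EnumMap<>(DeviceCategory.class);

    void register(DeviceCategory category, RenderingEngine engine) {
        engines.put(category, engine);
    }

    String render(DeviceCategory category, Object model) {
        RenderingEngine engine = engines.get(category);
        if (engine == null) {
            // No engine means the channel is not supported yet.
            throw new IllegalStateException("No rendering engine for " + category);
        }
        return engine.render(model);
    }
}
```

Adding a channel then amounts to registering one more engine; the models themselves stay untouched.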

Fat clients are devices with high processing power and a fast, reliable Internet connection, able to download and run mobile code, e.g. PCs. For these, MORE instantiates and assembles Java (Swing) UI components into a Window, Icon, Menu, Pointing device (WIMP) UI.
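The fat-client path can be illustrated with standard Swing components. This is a hypothetical sketch (`SwingRenderer` and `SampleCounter` are illustrative names, not MORE's API): it adds a label and a text field per instance field and a button per public parameterless method, and it omits writing edited values back to the model as well as placing the panel in a window.

```java
import java.awt.GridLayout;
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import javax.swing.JButton;
import javax.swing.JLabel;
import javax.swing.JPanel;
import javax.swing.JTextField;

/** Hypothetical fat-client renderer: label + text field per field, button per action. */
final class SwingRenderer {
    private SwingRenderer() {}

    static JPanel render(Object model) {
        JPanel panel = new JPanel(new GridLayout(0, 2));
        Class<?> type = model.getClass();
        for (Field f : type.getDeclaredFields()) {
            if (Modifier.isStatic(f.getModifiers()) || f.isSynthetic()) {
                continue;
            }
            f.setAccessible(true);
            Object value;
            try {
                value = f.get(model);
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
            panel.add(new JLabel(f.getName()));
            panel.add(new JTextField(value == null ? "" : value.toString()));
        }
        for (Method m : type.getDeclaredMethods()) {
            int mod = m.getModifiers();
            if (!Modifier.isPublic(mod) || Modifier.isStatic(mod)
                    || m.getParameterCount() != 0 || m.isSynthetic()) {
                continue;
            }
            m.setAccessible(true);
            JButton button = new JButton(m.getName());
            // Clicking the button invokes the model's method via reflection.
            button.addActionListener(e -> {
                try {
                    m.invoke(model);
                } catch (ReflectiveOperationException ex) {
                    throw new IllegalStateException(ex);
                }
            });
            panel.add(button);
        }
        return panel;
    }
}

/** Hypothetical example model. */
class SampleCounter {
    int count;
    public void increment() { count++; }
}
```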

Thin clients have limited processing power and a low-bandwidth connection. They can run custom applications but not mobile code, so the run-time generation of UIs relies on a two-tier architecture. The local tier is a component pre-installed on the user's device; the remote tier acts as a proxy between the model and the device. The local tier receives, through the remote tier, an XML representation of the model generated by MORE, parses it, and generates a UI on the fly. MORE has local tiers for interactive TVs, PDAs, and smart phones.
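The text does not give the schema of the XML sent to the local tier, so the element names below (`object`, `field`, `method`) are assumptions, as are the class names `ModelXmlWriter` and `SampleNote`. The sketch serializes one object's fields and public parameterless methods via reflection, escaping XML special characters; nested objects, collections, and type information are left out.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

/** Hypothetical serializer producing a flat XML description of one model object. */
final class ModelXmlWriter {
    private ModelXmlWriter() {}

    static String toXml(Object model) {
        Class<?> type = model.getClass();
        StringBuilder xml = new StringBuilder();
        xml.append("<object class=\"").append(escape(type.getSimpleName())).append("\">");
        for (Field f : type.getDeclaredFields()) {
            if (Modifier.isStatic(f.getModifiers()) || f.isSynthetic()) {
                continue;
            }
            f.setAccessible(true);
            Object value;
            try {
                value = f.get(model);
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
            xml.append("<field name=\"").append(escape(f.getName())).append("\">")
               .append(escape(String.valueOf(value))).append("</field>");
        }
        for (Method m : type.getDeclaredMethods()) {
            int mod = m.getModifiers();
            if (Modifier.isPublic(mod) && !Modifier.isStatic(mod) && m.getParameterCount() == 0) {
                xml.append("<method name=\"").append(escape(m.getName())).append("\"/>");
            }
        }
        return xml.append("</object>").toString();
    }

    /** Escapes the characters that are special in XML text and attribute values. */
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }
}

/** Hypothetical example model. */
class SampleNote {
    String text = "Buy milk & eggs";
    public void save() { /* application logic would go here */ }
}
```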

Web-like clients are equipped with a third-party browser, e.g. WAP phones or voice browsers. We have identified three main tag-based clients: Web, WAP, and voice, based on HTML, WML, and VoiceXML respectively. Communication between the MORE engine and the third-party browser is based on HTTP, and the MORE engine is deployed as a Java servlet. MORE creates an XML representation of the model, which an XSLT processor converts into a language suitable for the current device. The inputs to the XSLT processor are the XML document and a set of XSL style sheets tailored to the current device profile.
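The XSLT step maps directly onto the standard `javax.xml.transform` API. In this sketch the input document format (`object`, `field`, `method` elements), the class name `XsltRenderer`, and the style sheet, which turns fields into WML paragraphs and methods into links with a hypothetical `?invoke=` query parameter, are illustrative assumptions. A deployable WML deck would also need the WML DOCTYPE and the proper content type set by the servlet.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

/** Hypothetical web-like renderer: model XML + device style sheet -> device markup. */
final class XsltRenderer {
    private XsltRenderer() {}

    /** Hypothetical style sheet producing a minimal WML card from the model XML. */
    static final String WML_XSL =
        "<xsl:stylesheet version=\"1.0\" xmlns:xsl=\"http://www.w3.org/1999/XSL/Transform\">"
      + "<xsl:output method=\"xml\" omit-xml-declaration=\"yes\"/>"
      + "<xsl:template match=\"/object\">"
      + "<wml><card id=\"main\" title=\"{@class}\">"
      + "<xsl:for-each select=\"field\">"
      + "<p><xsl:value-of select=\"@name\"/>: <xsl:value-of select=\".\"/></p>"
      + "</xsl:for-each>"
      + "<xsl:for-each select=\"method\">"
      + "<p><a href=\"?invoke={@name}\"><xsl:value-of select=\"@name\"/></a></p>"
      + "</xsl:for-each>"
      + "</card></wml>"
      + "</xsl:template>"
      + "</xsl:stylesheet>";

    /** Applies the given style sheet to the model XML and returns the resulting markup. */
    static String render(String modelXml, String styleSheet) {
        try {
            Transformer t = TransformerFactory.newInstance()
                    .newTransformer(new StreamSource(new StringReader(styleSheet)));
            StringWriter out = new StringWriter();
            t.transform(new StreamSource(new StringReader(modelXml)), new StreamResult(out));
            return out.toString();
        } catch (TransformerException e) {
            throw new IllegalArgumentException("XSLT transformation failed", e);
        }
    }
}
```

Supporting HTML or VoiceXML would, under this design, only require another style sheet passed to the same `render` call.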

MORE adapts the VC both to “high-end” HTML clients (Fig. 2) with full JavaScript and Cascading Style Sheets (CSS) support, and to “low-end” HTML clients with limited JavaScript and no CSS support, e.g. old browsers or the mini browsers bundled with PDAs. Multimedia data are handled according to their MIME type. For audio and video data, a link in the UI lets the user request the resource. Images are resized to better fit the actual screen capabilities.
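The image size transformation is not specified in detail. One plausible rule, assumed here, scales the image by the tighter of the width and height ratios, preserving its aspect ratio and never enlarging it. `ImageFitter.fit` is a hypothetical helper that only computes the target size; the actual resampling could then be done with, e.g., `Graphics2D.drawImage`. For example, on a 176 by 208 pixel screen a 640 by 480 image would be scaled by 0.275 to 176 by 132.

```java
/** Hypothetical helper: fit an image to a screen while keeping its aspect ratio. */
final class ImageFitter {
    private ImageFitter() {}

    /** Returns {width, height} of the scaled image; images that already fit are not enlarged. */
    static int[] fit(int imageWidth, int imageHeight, int screenWidth, int screenHeight) {
        if (imageWidth <= 0 || imageHeight <= 0 || screenWidth <= 0 || screenHeight <= 0) {
            throw new IllegalArgumentException("dimensions must be positive");
        }
        // The tighter of the two ratios decides; the scale is capped at 1 (no enlargement).
        double scale = Math.min(1.0, Math.min((double) screenWidth / imageWidth,
                                              (double) screenHeight / imageHeight));
        int width = (int) Math.max(1, Math.round(imageWidth * scale));
        int height = (int) Math.max(1, Math.round(imageHeight * scale));
        return new int[] {width, height};
    }
}
```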

The voice modality raises some peculiar issues in translating Java objects into a speech-based UI. A “GrammarConformer” component rewrites field and method names so that the words produced by speech synthesis remain easy to understand and can be inserted into the grammars, in JSGF format, that the XSLT processor generates on the fly. Collections and arrays pose a further problem: if they contain many items, their vocal rendering may flood the user with words spoken by the text-to-speech (TTS) engine. To address this, MORE splits arrays and collections into groups of nine items, one per DTMF keypad digit (‘1’ to ‘9’). The remaining keys are used to move backward, move forward, and stop browsing the collection. Each generated VoiceXML page consists of a set of forms: a single master form and several slave forms. The master form lists the names of the object's fields and methods. When the user browses a field or invokes a method, the VoiceXML processor jumps to the slave form that handles it. If the code of a slave form requires no interaction with the server, the VoiceXML system then returns to the master form.
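The paging arithmetic and a simple name normalization can be sketched as follows. Everything here is hypothetical: `VoiceHelpers` is not MORE's API, the assignment of the backward, forward, and stop functions to specific keys is left open as in the text, and `speakable` is only a rough stand-in for the “GrammarConformer”, splitting camelCase and snake_case identifiers into lower-case words.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

/** Hypothetical helpers for the voice channel. */
final class VoiceHelpers {
    private VoiceHelpers() {}

    static final int PAGE_SIZE = 9; // one item per DTMF digit '1'..'9'

    /** Splits a list into consecutive pages of at most nine items. */
    static <T> List<List<T>> pages(List<T> items) {
        List<List<T>> pages = new ArrayList<>();
        for (int i = 0; i < items.size(); i += PAGE_SIZE) {
            int end = Math.min(i + PAGE_SIZE, items.size());
            pages.add(Collections.unmodifiableList(new ArrayList<>(items.subList(i, end))));
        }
        return pages;
    }

    /** Maps digit '1'..'9' on a page to an item index, or -1 if the key selects no item. */
    static int itemIndex(int page, char key, int total) {
        if (key < '1' || key > '9') {
            return -1; // other keys are reserved for navigation
        }
        int index = page * PAGE_SIZE + (key - '1');
        return index < total ? index : -1;
    }

    /** Rough stand-in for name conforming: "phoneNumber" -> "phone number". */
    static String speakable(String identifier) {
        return identifier
                .replaceAll("([a-z0-9])([A-Z])", "$1 $2")
                .replace('_', ' ')
                .replaceAll("\\s+", " ")
                .toLowerCase(Locale.ROOT)
                .trim();
    }
}
```

With 20 items, for instance, this yields pages of 9, 9, and 2 items, and pressing ‘2’ on the third page selects the item at index 19.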

WAP-enabled phones (Fig. 3) share many features with “low-end” HTML clients, so most issues have been addressed in the same way, except for audio and video content, which WAP devices cannot process. Images are converted to the WBMP format.
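The JDK's Image I/O API includes a writer for the WBMP format, which accepts only 1-bit images, so a conversion can first draw the source onto a `TYPE_BYTE_BINARY` image. `WbmpConverter` is a hypothetical helper; fitting the image to the screen and any dithering, which would usually improve the monochrome result, are omitted.

```java
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

/** Hypothetical helper converting an arbitrary image to WBMP bytes for WAP devices. */
final class WbmpConverter {
    private WbmpConverter() {}

    static byte[] toWbmp(BufferedImage source) {
        // WBMP is monochrome: draw the source onto a 1-bit (black/white) image first.
        BufferedImage mono = new BufferedImage(
                source.getWidth(), source.getHeight(), BufferedImage.TYPE_BYTE_BINARY);
        Graphics2D g = mono.createGraphics();
        try {
            g.drawImage(source, 0, 0, null);
        } finally {
            g.dispose();
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            // ImageIO.write returns false when no suitable writer is found.
            if (!ImageIO.write(mono, "wbmp", out)) {
                throw new IllegalStateException("No WBMP writer available");
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }
}
```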

MORE generates user interfaces from the reflective analysis of Java code. The resulting object-oriented model is translated into a concrete UI according to the features of the target device. To date, there are eight different implementations of the rendering engine, four of which target the Web. Furthermore, MORE can easily support new device categories and new user-defined data types. We are currently working on improved UI customization. MORE brings “design once, display anywhere” closer to reality for any device. MORE has been adopted in the e-MATE project [3].